Is our security becoming a battle of AIs?


With parallels to the harnessing of nuclear fission back in the 1940s, AI seems to be reaching a tipping point, radically changing the nature of conflict and potentially altering the balance between attackers and defenders.

Already used extensively in the optimization of data analysis, logistics and decision-making, AI has pervaded all sectors – including the military. On the battlefield, the most prominent use so far, recently demonstrated to devastating effect in Russia and Ukraine, has been in unmanned aerial vehicles (UAVs). These range from quadcopters that serve as kamikaze-like munitions to delivery systems capable of firing multiple missiles and returning for resupply like piloted aircraft.

Once purely the stuff of science fiction, the prospect of future battles being fought between robotically augmented human warriors and their supporting drones (either individually or in swarms) now seems not only plausible but likely. Certainly, AI is set to play an increasingly important role in future conflict and heralds a likely revolution in military affairs.

Unsurprisingly, the influence of AI in cyberwarfare promises to be greater still, and may be evolving even faster.

The rise of GenAI

The explosion of AI into public awareness is mostly due to the recent emergence of powerful generative AI (GenAI), driven by advances in processing speed and deep learning models that have made GenAI increasingly powerful and affordable.

However, in addition to GenAI, we see continued advances in the discriminative/predictive AIs that are central to both AI-powered automation and cybersecurity. Predictive AI has been a cornerstone of cyberthreat research and response for over a decade, and FortiGuard Labs (Fortinet's threat research organisation) derives actionable threat intelligence from the billions of pieces of security data it collects every day.

But while discriminative AI continues to play a vital role in cyber-defence, the revolution in GenAI is a tide that raises all boats – both friend and foe.

Cyberthreats increase in volume and variety

Unlike discriminative AI, which focuses on predicting what category a new piece of data belongs to, GenAI can synthesize entirely novel data including text, images and, most significantly in this context, computer code. As such, GenAI is poised to democratize the development of software, including malicious software. That is likely to increase the share of vulnerabilities that malicious actors exploit, currently less than 5 per cent of the total, and to broaden the variety of attacks. Furthermore, GenAI can generate code much faster than human programmers, potentially shrinking the time window between public disclosure of new vulnerabilities and the emergence of malware to exploit them.

So, while customers might typically have had days or even weeks to apply vendor-created patches or workarounds for newly discovered vulnerabilities, with GenAI, we may soon see exploits in the wild within hours or even minutes. Furthermore, attackers can use GenAI to quickly analyse new patches and security response tactics and produce new variants to evade or defeat them.

In addition to its use in the coding of exploits, GenAI is also being used to increase the credibility of phishing and other social engineering attacks, creating ever-more convincing text, voice, and even deep-fake video interactions with victims.

Even the reconnaissance phase of cybercrime (identifying the most lucrative potential victims and mapping their networks) is being transformed by AI, increasing the breadth, speed, and accuracy of pre-attack examination and assessment of potential targets.


Intelligent, automated cybersecurity – is it enough?

Of course, as well as enabling malicious cyber actors to search for vulnerabilities, AI is being used to find bugs and mitigate potential security flaws in legitimate software. This is especially important where developers make use of open-source and other third-party software modules in their software supply chains.

As a result, an increasing number of cybersecurity solutions, including those from Fortinet, already use AI to assist in identifying vulnerabilities and prioritising their resolution. Similarly, the volume of security data keeps expanding as the number of digitally connected devices and applications grows. Security teams that are already thinly stretched by the global skills shortage can use AI-powered automation to interpret the torrent of raw data from across their networks, then determine and execute the most appropriate response to anomalous or malicious activity.

Traditional stove-piped cybersecurity solutions, point products designed to protect against specific categories of threat, often fail to stop today's more sophisticated multi-vector attacks. Consequently, solutions that make use of AI to help correlate indicators of compromise from multiple sources of data and integrate defensive activity across disparate security solutions are better able to deal with AI-powered attacks.
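The idea of correlating indicators of compromise across point products can be sketched in a few lines of Python. This is a deliberately simple, rule-based illustration rather than an AI model or any vendor's implementation: the alert records, field names and the `correlate` function are all hypothetical, and the IP addresses come from ranges reserved for documentation.

```python
from collections import defaultdict

# Hypothetical alerts from three separate point products (illustrative data only).
ALERTS = [
    {"source": "email_gateway", "indicator": "203.0.113.7", "host": "pc-12"},
    {"source": "endpoint", "indicator": "203.0.113.7", "host": "pc-12"},
    {"source": "firewall", "indicator": "203.0.113.7", "host": "pc-31"},
    {"source": "endpoint", "indicator": "198.51.100.4", "host": "pc-02"},
]


def correlate(alerts, min_sources=2):
    """Return indicators reported by at least `min_sources` distinct sources."""
    sources = defaultdict(set)
    hosts = defaultdict(set)
    for alert in alerts:
        sources[alert["indicator"]].add(alert["source"])
        hosts[alert["indicator"]].add(alert["host"])
    return {
        indicator: {"sources": sorted(seen), "hosts": sorted(hosts[indicator])}
        for indicator, seen in sources.items()
        if len(seen) >= min_sources
    }


if __name__ == "__main__":
    for indicator, detail in correlate(ALERTS).items():
        print(indicator, detail)
```

An indicator that only one product reports may be noise. The same indicator seen by the email gateway, the endpoint agent and the firewall is a much stronger signal of a multi-vector attack, and this is the kind of link a stove-piped tool cannot make on its own.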

However, despite the clear and numerous advantages of AI-powered cybersecurity, there is another, more insidious problem lurking below the surface. Even if patches for known software vulnerabilities are made available to users of those products, patches must be applied to be effective. In the term “N-day vulnerability”, N is the number of days since a vulnerability was disclosed and a patch was released, and many users fail to apply patches in a timely fashion, or even at all. An attacker doesn't need to develop a novel (zero-day) exploit when lucrative targets fail to apply existing patches to resolve known vulnerabilities. AI can help enterprises with vulnerability management, identifying vulnerabilities and even applying patches, but too few organisations currently avail themselves of such tools.
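To make the N-day idea concrete, here is a minimal Python sketch that computes N for each unpatched vulnerability and lists the longest-exposed first. The vulnerability IDs, dates and function names are hypothetical placeholders, and the sketch assumes disclosure and patch release fall on the same day. A real vulnerability management tool would also weigh severity and exploit activity.

```python
from datetime import date

# Hypothetical vulnerability records: placeholder IDs and dates, not real CVEs.
VULNS = [
    {"id": "VULN-A", "patch_released": date(2025, 1, 10), "patched": False},
    {"id": "VULN-B", "patch_released": date(2025, 3, 1), "patched": True},
    {"id": "VULN-C", "patch_released": date(2025, 3, 20), "patched": False},
]


def n_days(patch_released, today):
    """N = days elapsed since the patch was released."""
    return (today - patch_released).days


def unpatched_by_exposure(vulns, today):
    """List (id, N) for unpatched vulnerabilities, longest-exposed first."""
    exposure = [
        (v["id"], n_days(v["patch_released"], today))
        for v in vulns
        if not v["patched"]
    ]
    return sorted(exposure, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for vuln_id, n in unpatched_by_exposure(VULNS, today=date(2025, 4, 1)):
        print(f"{vuln_id}: unpatched for {n} days")
```

With the sample data and a reference date of 1 April 2025, VULN-A has been exposed for 81 days and VULN-C for 12. VULN-B is omitted because its patch has been applied.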

The road ahead

The AI genie is out of the bottle and will change our world forever. To harness its power for good, optimising autonomy and automation on the digital battlefield with strong, adaptive cybersecurity will be essential. But only through collaboration between industry, governments and other public bodies can we counter the scale and complexity of today's AI-powered threat landscape.

Companies like Fortinet continue to innovate and deploy AI-enhanced solutions commercially, enabling governments to focus on the technically demanding and fast-moving national security challenges of AI-powered automation while leveraging commercial cybersecurity capabilities.

This means choosing partners that have a track record of AI innovation (both generative and predictive) and that are committed to “Secure by Design”, the belief that strong security must be integral to the entire lifecycle of IT products and services, from initial design to final deployment.

| Rising data security budgets fail to curb insider criticism Organizations worldwide are boosting investment in safeguarding sensitive information, but insider threats remain a costly weak spot. |

| AI adoption in cybersecurity surges across Vietnam Fortinet has announced the findings of a 2025 IDC survey highlighting how organizations across Vietnam are adopting AI as the front line of their cyber defense strategy. |

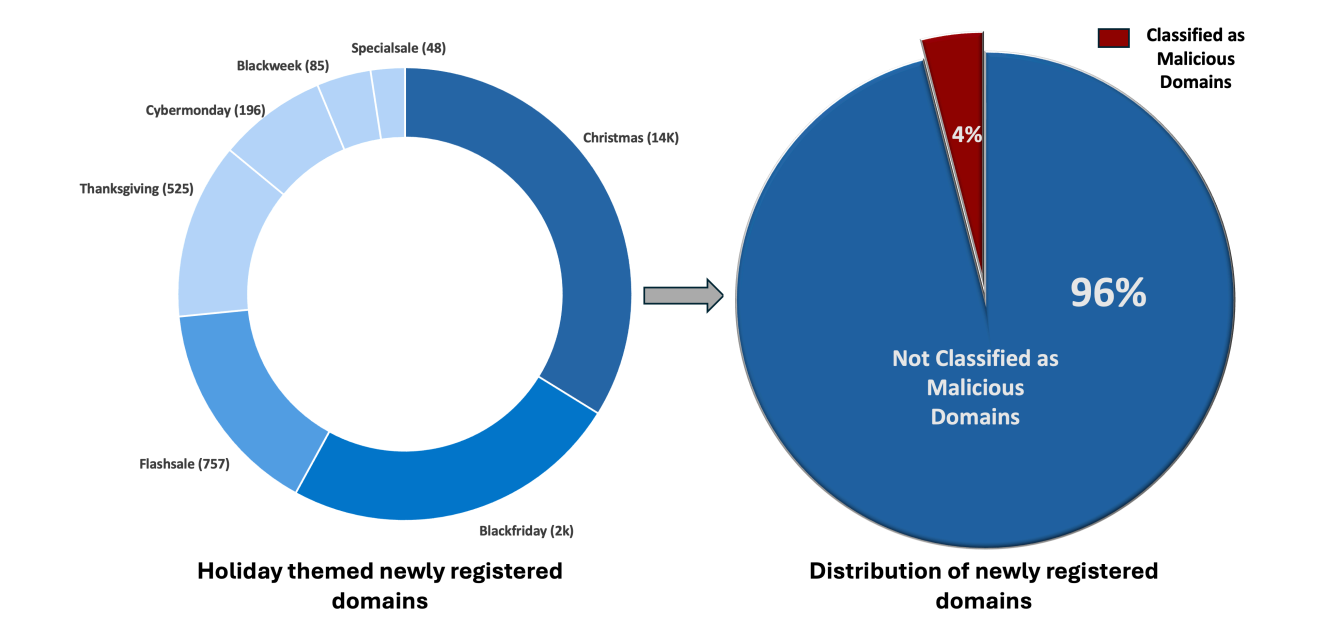

| Cyberthreats targeting the 2025 holiday season FortiGuard has analyzed data from the past three months to identify the most significant patterns shaping the 2025 holiday cyber-threat risk. |

What the stars mean:

★ Poor ★ ★ Promising ★★★ Good ★★★★ Very good ★★★★★ Exceptional

Tag:

Tag:

Related Contents

Latest News

More News

- Vietnam forest protection initiative launched (February 07, 2026 | 09:00)

- China buys $1.5bn of Vietnam farm produce in early 2026 (February 06, 2026 | 20:00)

- Vietnam-South Africa strategic partnership boosts business links (February 06, 2026 | 13:28)

- Mondelez Kinh Do renews the spirit of togetherness (February 06, 2026 | 09:35)

- Seafood exports rise in January (February 05, 2026 | 17:31)

- Accelerating digitalisation of air traffic services in Vietnam (February 05, 2026 | 17:30)

- Ekko raises $4.2 million to improve employee retention and financial wellbeing (February 05, 2026 | 17:28)

- Dassault Systèmes and Nvidia to build platform powering virtual twins (February 04, 2026 | 08:00)

- The PAN Group acquires $56 million in after-tax profit in 2025 (February 03, 2026 | 13:06)

- Young entrepreneurs community to accelerate admin reform (February 03, 2026 | 13:04)

Mobile Version

Mobile Version