Tech world looking to new developments at AWS re:Invent 2024

The event is expected to attract about 65,000 in-person attendees, from businesses, tech experts, and startups to customers and innovators, with tens of thousands more joining online. They are there to hear real stories from customers and AWS leaders about pressing topics such as generative AI, learn about new product launches, watch demos, and get behind-the-scenes insights during keynotes.

Matt Garman, CEO of AWS, delivered the keynote at re:Invent 2024 in Las Vegas. Photo: BT

Speaking at the event on December 3, Matt Garman, CEO of AWS, talked about how AWS is innovating across every aspect of the world’s leading cloud. He explored how the company is reinventing foundational building blocks as well as developing new experiences, all to give customers and partners what they need to build a better future.

This is the first re:Invent as CEO for Garman, who was promoted to replace Adam Selipsky in June.

New chapter begins

“This is my first event as CEO, but it’s not my first re:Invent. I've actually had the privilege to be at every re:Invent since 2012. Now 13 years into this event, a lot has changed, but what hasn’t is what makes re:Invent so special – bringing together the passionate, energetic AWS community to learn from each other,” he said.

According to the CEO, businesses worldwide are rapidly embracing cloud technologies to drive innovation and revenue growth. Customers are moving workloads to the cloud to capitalise on the latest advancements.

“We have more instances, more capabilities, and more compute than any other cloud,” he said. While GenAI captures headlines, Garman emphasised that AWS remains deeply committed to innovating its foundational services – compute, storage, and databases.

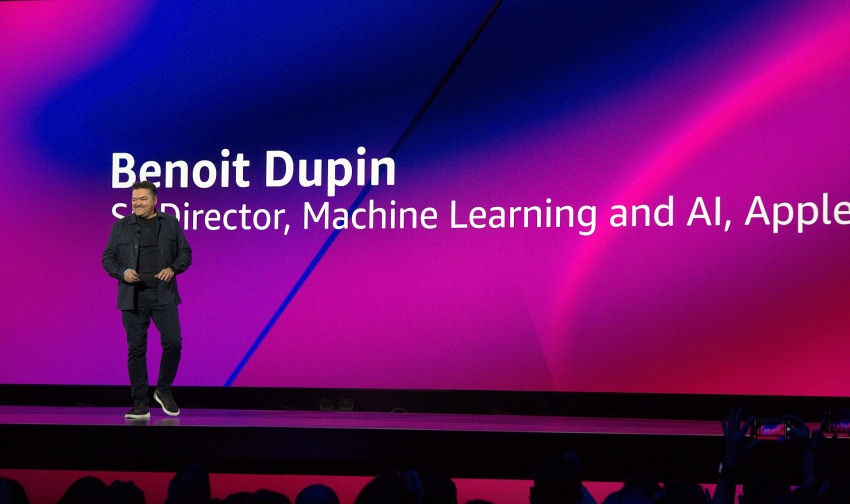

During the event, leading partners shared their journeys with AWS and the improvements their businesses have made. Benoit Dupin, Apple’s senior director of machine learning and AI, talked about Apple’s extensive use of AWS services across its products and services.

Benoit Dupin, Apple’s senior director of machine learning and AI. Photo: AWS

For over a decade, Apple has relied on AWS to power many of its cloud-based services. Now, this collaboration is fuelling the next generation of intelligent features for Apple users worldwide, according to Dupin.

One of the latest chapters of this collaboration is Apple Intelligence, a powerful set of features integrated across iPhone, iPad, and Mac devices. This personal intelligence system understands users and helps them work, communicate, and express themselves in new and exciting ways.

Beyond Apple Intelligence, Apple has found many other benefits leveraging the latest AWS services. By moving from x86 and G4 instances to Graviton and Inferentia2 respectively, Apple has achieved over 40 per cent efficiency gains in some of its machine learning services.

“AWS’ services have been instrumental in supporting our scale and growth,” Dupin said. “And, most importantly, in delivering incredible experiences for our users.”

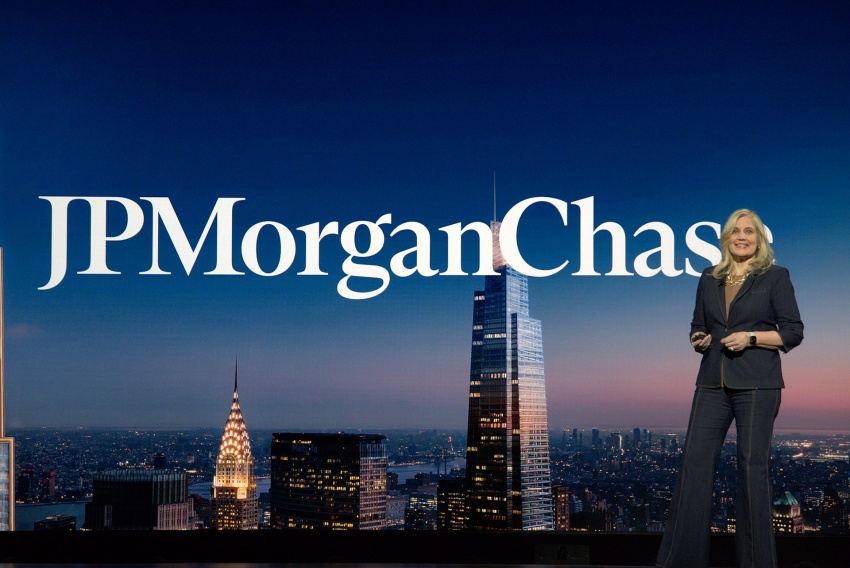

JPMorgan Chase global chief information officer Lori Beer told the re:Invent audience that the company is using AWS to unlock business value and to open up new opportunities with GenAI.

JPMorgan Chase global CIO Lori Beer at re:Invent 2024. Photo: AWS

More than 5,000 data scientists at JPMC use Amazon SageMaker every month to develop AI models, building a platform that improves a range of banking services. This could reduce customer waiting times and improve the quality of customer service. Beer said JPMC is exploring large language models on Amazon Bedrock to enable new services, such as AI-assisted trip planning for customers, or to help bankers generate personalised outreach ideas to better engage with customers.

In 2022, JPMorgan Chase started using AWS Graviton chips and saw performance gains. By 2023, the company had nearly 1,000 applications running on AWS, including core services such as deposits and payments. Its decision to build its data management platform, Fusion, on AWS is helping it quickly process large amounts of market information, enabling JPMC customers to analyse market trends faster.

Elsewhere, PagerDuty, a global leader in digital operations management, is using AWS to help its customers, including banks, media streaming services, and retailers, use GenAI to better respond to, recover from, and learn from service and operational incidents such as unplanned downtime.

New key announcements

At re:Invent 2024, AWS announced innovations across its core services, optimising these foundational components for GenAI workloads, data-intensive applications, and distributed computing environments.

AWS CEO Matt Garman announced new capabilities for Amazon Bedrock. Photo: AWS

During his re:Invent keynote, AWS CEO Garman announced a host of new capabilities for Amazon Bedrock that will help customers solve some of the top challenges the entire industry is facing when moving GenAI applications to production, while opening it up to new use cases that demand the highest levels of precision.

He also announced new capabilities for Amazon Aurora and Amazon DynamoDB to support customers’ most demanding workloads that need to operate across multiple regions with strong consistency, low latency, and the highest availability.

AWS also announced new features for Amazon Simple Storage Service (Amazon S3) that make it the first cloud object store with fully managed support for Apache Iceberg for faster analytics, and the easiest way to store and manage tabular data at any scale. The new features also include the ability to automatically generate queryable metadata, simplifying data discovery and understanding to help customers unlock the value of their data in S3.

Moreover, AWS announced Trainium3, its next-generation AI chip, which will allow customers to build bigger models faster and deliver superior real-time performance when deploying them. It will be the first AWS chip made on a 3-nanometer process node, setting a new standard for performance, power efficiency, and density. The first Trainium3-based instances are expected to be available in late 2025.

Photo: AWS

Garman also unveiled the next generation of Amazon SageMaker, bringing together the capabilities customers need for fast SQL analytics, petabyte-scale big data processing, data exploration and integration, machine learning (ML) model development and training, and GenAI on one integrated platform.

Other new capabilities include the new Amazon EC2 Trn2 instances, powered by AWS Trainium2 chips and purpose-built for high-performance deep learning training of generative AI models, including large language models (LLMs) and latent diffusion models.

New capabilities for Amazon Q Business and Amazon Q Developer were also among the announcements highlighted.

Peter DeSantis, senior vice president of AWS Utility Computing, took the stage the night before, on December 2, for a keynote that continued AWS’ tradition of diving deep into the engineering that powers its services.

Peter DeSantis, senior vice president of AWS Utility Computing. Photo: AWS

DeSantis said that the specific needs of AI training and inference workloads represent a new opportunity for AWS teams to invent in entirely different ways, from developing the highest performing chips to interconnecting them with novel technology.

According to DeSantis, one of the unique things about AWS is how much time its leaders spend getting into the details, so they know what is happening with customers and can make fast, impactful decisions. One such decision, he said, was to start investing in custom silicon 12 years ago, a move that altered the course of the company’s story.

He told the audience that the evening marked “the next chapter” in how AWS is innovating across the entire technology stack to bring customers truly differentiated offerings.
